Pivoting to AI will have serious implications for fiduciary responsibilities. Companies, family offices, and all advisors must strike an appropriate balance between human expertise and technological advancement. It is imperative that AI users critically evaluate AI-generated results through independent human thinking. The failure to do so can be catastrophic for everyone involved.

Tools that quickly analyze vast amounts of data, offer insights, manage risk, and provide other assistance are everywhere. Anyone who sells personalized and human-centric approaches (including attorneys!) must be prepared to “analyze the analysis.” Ensuring that AI-driven insights make common sense and comply with relevant laws does more than enhance the fulfillment of fiduciary duties. It is the epitome of fulfilling the fiduciary duties of loyalty and care.

This instruction is not limited to higher-ranking officers and directors. Everyone within an organization has a duty to perform their jobs and tasks in a commercially reasonable manner. This includes understanding the underlying algorithms, mitigating biases in data inputs, and interpreting AI-generated outputs in their business, ethical, and legal contexts. It also means placing a high priority on nuances that machines can never catch.

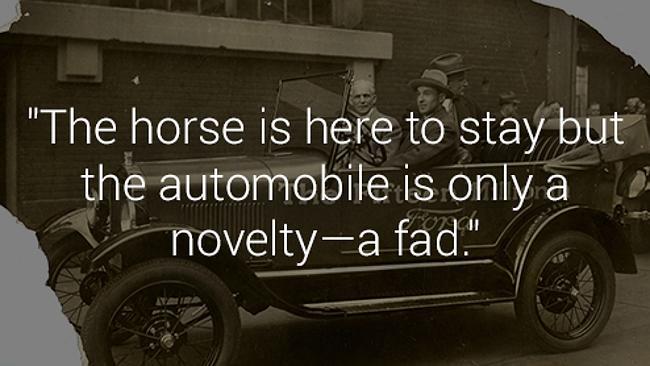

Common sense dictates that AI will never capture the human elements of decision making because AI models are (a) designed to please the user and (b) susceptible to making errors or hallucinating facts. It is equally obvious that human nature will lead to overreliance on AI for reasons ranging from expediency to laziness. Too many people are already pasting a presentation, agreement, or other document into ChatGPT instead of taking the time to do their jobs properly. The amount of trade secrets and financial information that has been shared this way is frightening.

As the dollar value, sensitivity, and complexity of a matter increase, so do the ramifications of failing to require human intuition and experience to analyze AI-created results. At some point, the failure to act properly becomes intentional rather than negligent, and proving liability therefore becomes much easier.

Yet failing to use AI can also create problems. AI enables people to make more informed decisions, reduce their errors, and increase their efficiency. By having AI perform routine tasks, we can focus on more complex and value-added tasks.

Even so, embracing AI cannot come at the expense of human judgment and skills. Use your own intelligence to ensure this does not happen before you find yourself defending a lawsuit or your job.

David Seidman is the principal and founder of Seidman Law Group, LLC. He serves as outside general counsel for companies, which requires him to consider a diverse range of corporate, dispute resolution and avoidance, contract drafting and negotiation, and other issues. In particular, he has significant experience in hospitality law from representing third-party management companies, owners, and developers.

He can be reached at david@seidmanlawgroup.com or 312-399-7390.

This blog post is not legal advice. Please consult an experienced attorney to assist with your legal issues.

Photo credit: www.lifeboat.com